Our previous work

in healthcare

Over the years we have worked with quite a variety of organisations. What did they have in common? The wish to do their work better and smarter with data, machine learning and AI.

Read below how we helped organisations in healthcare!

"No robots at the bed, please"

Reducing workload and waiting lines

The first thing that comes to mind when talking about 'AI support in healthcare' is often the robot at the hospital bed: the machine that helps change sheets, brings tea, takes measurements, and so on. But those are exactly the things that patients prefer to have done by people.

By letting AI help with the administration

The tasks that AI is particularly good at, and which most healthcare workers don't really enjoy, are the administrative ones. These are usually repetitive and standardised to a certain degree. On top of that, there are many patients who together generate a lot of data. Ideal for AI.

And recognizing patterns

Computers are a lot better than people at doing tasks quickly and recognizing patterns in large amounts of data. You can use these strengths to enhance and expand your domain expertise.

Whether it concerns deriving diagnoses from texts, finding the right treatment for a patient or predicting spikes in the workload: AI can help you with this.

In a safe way

And yes, this can also be done with sensitive data in patient dossiers. To make that possible, we developed a pseudonymisation tool that is specialised in (Dutch) healthcare texts, and we use Federated Learning to learn from data from different hospitals without moving the data.

Healthcare | Privacy | NLP

Labeling hundreds of thousands of medical case reports automatically

Hospitals in the Netherlands are required to assign universal diagnosis codes to diagnosed patients, in order to report to the Dutch Healthcare Authority (NZa) and Statistics Netherlands (CBS). These hospitals hire specially trained staff to assign the codes. This manual work is often boring, takes a lot of time and is prone to errors.

DHD wanted to find a way to support hospitals and coders in this laborious task, for instance with a tool that automatically processes the 'easy cases' and supports coders with the harder ones.

Using AI, without privacy violations

This is possible with AI, because millions of patient dossiers have already been labeled and can serve as examples to learn from. These examples, however:

- contain quite a lot of sensitive patient and doctor data, and

- are spread over many hospitals, which cannot simply share their data.

That is why we used pseudonymisation and Federated Learning to develop a tool that can recognise which ICD-10 codes apply to a patient dossier from the free text in, for example, discharge letters and OR reports.
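To give an idea of how a model can learn from data that never leaves a hospital, here is a minimal sketch of federated averaging. It is an illustration under our own assumptions, not the production setup: the toy linear model, the function names (`local_update`, `federated_average`, `federated_training`) and the numbers are all hypothetical. Each site trains on its own data and only the model weights travel.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pairs; stand-in for a site's private data


def local_update(weights: List[float], data: List[Sample],
                 lr: float = 0.1, epochs: int = 20) -> List[float]:
    """Train a toy model y = w*x + b on ONE site's data; the data never leaves this function."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(((w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(site_weights: List[List[float]],
                      site_sizes: List[int]) -> List[float]:
    """Combine site models, weighting each site by its number of samples."""
    total = sum(site_sizes)
    dims = len(site_weights[0])
    return [
        sum(w[d] * n for w, n in zip(site_weights, site_sizes)) / total
        for d in range(dims)
    ]


def federated_training(sites: List[List[Sample]], rounds: int = 100) -> List[float]:
    """Each round: send the global model out, train locally, average the results."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Two hypothetical "hospitals" whose data follow the same rule y = 2x + 1.
hospital_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
hospital_b = [(1.5, 4.0), (2.0, 5.0)]
print(federated_training([hospital_a, hospital_b]))
```

Only the two numbers in `weights` are exchanged between the sites and the coordinator. A real deployment would add secure communication and a far larger model.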

Saving time with certainty and explainability

When developing AI models, you should make sure that they are learning the right things. Especially in sensitive applications, you should make sure that the AI makes only a few mistakes and that you understand why it makes the decisions it does. Only then can you fully automate tasks, which is not something you should always want.

Given the requirement that it should be at least 80% certain, our AI model (algorithm) was able to automatically assign over 30% of the codes. This might sound low, but imagine saving a third of your working time!

For cases where the AI's certainty was below this high threshold, the algorithm can give a top 5 of the codes most likely to match the case (with explanation!), which drastically reduces the time required to assign the label manually.
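The routing rule described above can be sketched in a few lines. This is a simplified illustration, not our actual model: the function name `route_case` is hypothetical, and the probabilities in the examples are invented. It only shows the idea of assigning a code automatically at 80% certainty or more, and otherwise offering a top 5 to the human coder.

```python
from typing import Dict, List, Tuple


def route_case(code_probs: Dict[str, float],
               threshold: float = 0.80,
               top_k: int = 5) -> Tuple[str, List[str]]:
    """Return ("auto", [code]) when the model is certain enough,
    otherwise ("review", top-k candidate codes) for a human coder."""
    ranked = sorted(code_probs.items(), key=lambda kv: kv[1], reverse=True)
    best_code, best_prob = ranked[0]
    if best_prob >= threshold:
        return ("auto", [best_code])
    return ("review", [code for code, _ in ranked[:top_k]])


# Invented model outputs for two cases (probabilities are illustrative only).
easy_case = {"I21.9": 0.93, "I10": 0.04, "E11.9": 0.03}
hard_case = {"J18.9": 0.41, "J44.1": 0.27, "I50.9": 0.12,
             "J96.0": 0.09, "N39.0": 0.06, "I10": 0.05}

print(route_case(easy_case))  # ('auto', ['I21.9'])
print(route_case(hard_case))  # ('review', ['J18.9', 'J44.1', 'I50.9', 'J96.0', 'N39.0'])
```

Raising `threshold` means fewer cases are handled automatically but with fewer mistakes; lowering it does the opposite.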

Healthcare | Sensor Data

Sensor data in clinical research

How can we use wearables in clinical research? Our client frequently conducts medical research with test subjects and wants to use sensor data in clinical studies, and to be able to process the huge amounts of data that these sensors generate.

What did we do?

- Examined sensor data for usability

- Helped design a study for clinical validation of sensor-based technology

- Gave strategic advice on setting up a Big Data environment and tooling

Based on our results, a clinical trial was started whose principal objective is to automatically distinguish patients with a certain disease from healthy controls.

Together with this client we are developing AI models that can directly diagnose patients from sensor data.

Pseuduck | Pseudonymising | Anonymising | Privacy

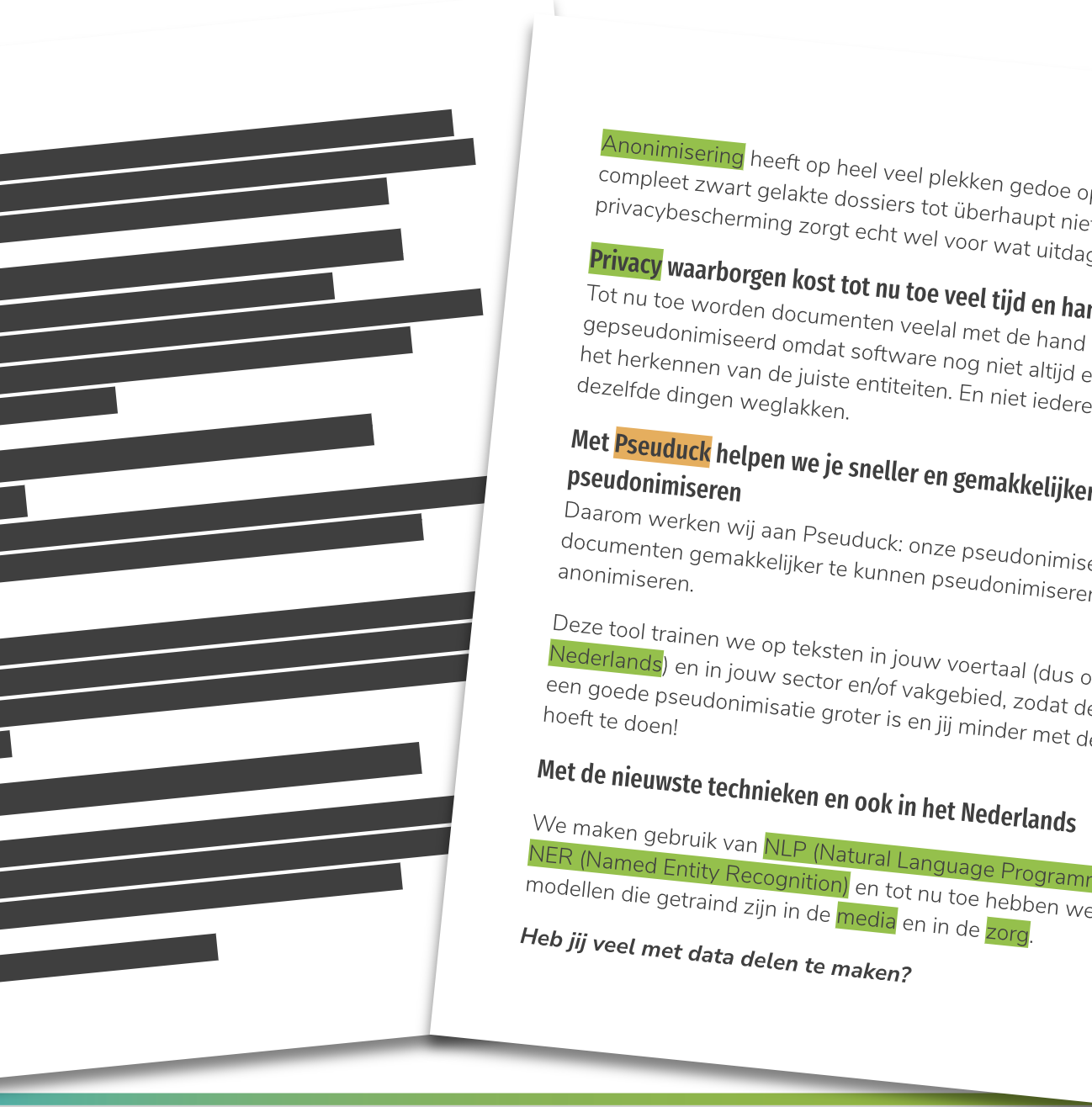

Ensuring privacy when sharing data

'Just sharing data or documents' is not that simple. They often contain personal and private information. So if you want to comply with the GDPR, you will often need to put some time and effort into anonymising or pseudonymising the data before you can work with it.

Today, most anonymisation work is done by hand. This is a lot of work, takes quite some time and is, like any other tedious job, prone to errors. Especially when it concerns free text!

Pseuduck to the rescue for GDPR-proof texts

Pseuduck is a pseudonymisation tool that we developed to make it easier to make your free texts, ranging from small text fields to large (collections of) documents, GDPR-proof.

The benefit of Pseuduck is that we can teach it to specialise further in different fields and sectors, so that it pseudonymises or anonymises your texts using your jargon and according to your needs and requirements.
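To make the idea of pseudonymising free text concrete, here is a small sketch. It is not how Pseuduck works internally: it assumes the sensitive terms have already been found (in practice that detection is the hard part) and only shows the replacement step, in which every term gets a stable placeholder so the text stays readable. The function name `pseudonymise` and the example names are invented.

```python
import re
from typing import Dict, Tuple


def pseudonymise(text: str, entities: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
    """Replace each known sensitive string with a stable placeholder.

    `entities` maps a sensitive string to its category, e.g. {"Jansen": "DOCTOR"}.
    Returns the pseudonymised text and the mapping that was applied.
    """
    if not entities:
        return text, {}

    # Longest strings first, so a full name is matched before any shorter part of it.
    ordered = sorted(entities, key=len, reverse=True)

    mapping: Dict[str, str] = {}
    counters: Dict[str, int] = {}
    for surface in ordered:
        category = entities[surface]
        counters[category] = counters.get(category, 0) + 1
        mapping[surface] = f"[{category}_{counters[category]}]"

    # One pass over the text, so placeholders themselves are never rewritten.
    pattern = re.compile("|".join(re.escape(s) for s in ordered))
    return pattern.sub(lambda m: mapping[m.group(0)], text), mapping


# Invented example text; names and date are fictional.
note = ("Dr. Jansen saw Piet de Boer on 12-03-2021. "
        "Piet de Boer was discharged by Dr. Jansen.")
found = {"Piet de Boer": "PATIENT", "Jansen": "DOCTOR", "12-03-2021": "DATE"}

clean, used_mapping = pseudonymise(note, found)
print(clean)
```

Because the same person always gets the same placeholder, the text can still be followed. Whoever holds the mapping can reverse the replacement, which is what distinguishes pseudonymising from anonymising.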

What sensitive texts do you have to work with?

Contact us for a trial!

Selectical | Literature Research | NLP | Active Learning

Literature research in 1/3 of the time

Researchers doing (systematic) literature reviews need to screen many thousands of research papers and select the ones that are relevant to a specific subject. Usually only a few hundred of these thousands of papers are actually useful. Making this selection manually takes a lot of valuable time and is simply boring.

Without pre-labeled data

Usually we train an AI model using labeled data. Using examples. But that is not possible in this case! The challenge is that since every literature study is different, there are no examples yet. Labeling these examples just so happens to be your job. That doesn't mean that you can't use AI though!

And still use AI

For this we use a technique called Active Learning: this type of AI learns from human input, and can improve itself constantly while working. This way the researcher can start working the same way as usual, but with an AI learning in the background. Once the AI is certain enough that it has identified the right patterns, it will take over the labeling. This way the researcher only has to validate or correct the AI's work. This saves a lot of time!
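As a rough illustration of this loop, the sketch below uses uncertainty sampling, one common form of Active Learning. It is not the Selectical implementation: the tiny naive Bayes classifier, the fixed labeling budget (instead of a certainty-based stopping rule) and all names such as `active_screening` and `ask_researcher` are our own simplifying assumptions.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Tuple


class TinyNaiveBayes:
    """Minimal bag-of-words classifier with Laplace smoothing (labels: True/False)."""

    def fit(self, texts: List[str], labels: List[bool]) -> "TinyNaiveBayes":
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        return self

    def prob_relevant(self, text: str) -> float:
        n_docs = sum(self.doc_counts.values())
        log_score = {}
        for label in (True, False):
            total = sum(self.word_counts[label].values())
            score = math.log((self.doc_counts[label] + 1) / (n_docs + 2))
            for word in text.lower().split():
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total + len(self.vocab) + 1))
            log_score[label] = score
        # Turn the two log scores into a probability for "relevant".
        top = max(log_score.values())
        e_true = math.exp(log_score[True] - top)
        e_false = math.exp(log_score[False] - top)
        return e_true / (e_true + e_false)


def active_screening(papers: List[str],
                     ask_researcher: Callable[[str], bool],
                     seed_size: int = 2,
                     budget: int = 5) -> Tuple[Dict[int, bool], Dict[int, bool]]:
    """Let the researcher label `budget` papers; the model suggests labels for the rest."""
    # The researcher starts as usual by labeling the first few papers.
    human_labels = {i: ask_researcher(papers[i]) for i in range(min(seed_size, len(papers)))}
    model = TinyNaiveBayes()

    while len(human_labels) < min(budget, len(papers)):
        model.fit([papers[i] for i in human_labels], list(human_labels.values()))
        unlabeled = [i for i in range(len(papers)) if i not in human_labels]
        # Uncertainty sampling: ask about the paper the model is least sure of.
        query = min(unlabeled, key=lambda i: abs(model.prob_relevant(papers[i]) - 0.5))
        human_labels[query] = ask_researcher(papers[query])

    model.fit([papers[i] for i in human_labels], list(human_labels.values()))
    suggestions = {i: model.prob_relevant(papers[i]) >= 0.5
                   for i in range(len(papers)) if i not in human_labels}
    return human_labels, suggestions


# Invented titles; the "researcher" here is simulated by a simple rule.
titles = [
    "randomised trial of wearable sensors in heart failure",
    "history of hospital architecture",
    "sensor based trial for early sepsis detection",
    "budget planning for hospital canteens",
    "clinical trial of a smartwatch for arrhythmia",
    "parking policy at university hospitals",
    "trial protocol for remote patient monitoring",
    "staff satisfaction survey results",
]
labeled, suggested = active_screening(titles, ask_researcher=lambda t: "trial" in t)
print(len(labeled), "labeled by hand,", len(suggested), "suggested by the model")
```

In this sketch the researcher labels five titles and the model proposes labels for the remaining three, which the researcher then only has to validate or correct.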

Read more about Selectical

Ask for a demo!