Our previous work

Over the years we have worked with quite a variety of organisations. What did they have in common? The wish to do their work better and smarter, with data, machine learning and AI.

Read below how we helped them!

Let our previous work inspire you to take your next step with data and AI. Want to start tackling your own case now?

Selectical | Literature Research | NLP | Active Learning

Literature research in 1/3 of the time

Researchers doing (systematic) literature reviews need to screen many thousands of research papers and select the ones that are relevant to a specific subject. Usually, out of these thousands of papers, only a few hundred are actually useful. Making this selection manually takes a lot of valuable time and is simply boring.

Without pre-labeled data

Usually we train an AI model using labeled data. Using examples. But that is not possible in this case! The challenge is that since every literature study is different, there are no examples yet. Labeling these examples just so happens to be your job. That doesn't mean that you can't use AI though!

And still use AI

For this we use a technique called Active Learning: this type of AI learns from human input, and can improve itself constantly while working. This way the researcher can start working the same way as usual, but with an AI learning in the background. Once the AI is certain enough that it has identified the right patterns, it will take over the labeling. This way the researcher only has to validate or correct the AI's work. This saves a lot of time!
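To make the idea concrete, here is a minimal sketch of one common form of Active Learning, uncertainty sampling. It is not Selectical's actual implementation: the tiny word-count classifier, the function names (`train`, `prob_relevant`, `screen`), the `seed_labels` and `confident` parameters and the stopping rule are all assumptions made for illustration.

```python
import math
from collections import Counter


def train(labeled):
    """Fit a tiny naive Bayes model on (text, is_relevant) pairs."""
    word_counts = {True: Counter(), False: Counter()}
    doc_counts = Counter()
    for text, label in labeled:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, doc_counts


def prob_relevant(model, text):
    """Return the model's estimate of P(relevant | text), with add-one smoothing."""
    word_counts, doc_counts = model
    vocab = set(word_counts[True]) | set(word_counts[False])
    n_docs = sum(doc_counts.values())
    log_score = {}
    for label in (True, False):
        total = sum(word_counts[label].values())
        # Smoothed class prior, so a class without examples never yields log(0).
        score = math.log((doc_counts[label] + 1) / (n_docs + 2))
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / (total + len(vocab) + 1))
        log_score[label] = score
    # Turn the two log scores into a probability; clamp to avoid overflow.
    diff = max(min(log_score[False] - log_score[True], 700.0), -700.0)
    return 1.0 / (1.0 + math.exp(diff))


def screen(papers, ask_human, seed_labels=4, confident=0.9):
    """Let a human label the uncertain papers; the model labels the rest.

    ask_human is a hypothetical callback that returns True for a relevant paper.
    """
    unlabeled = list(papers)
    labeled = [(p, ask_human(p)) for p in unlabeled[:seed_labels]]
    unlabeled = unlabeled[seed_labels:]
    auto = []
    while unlabeled:
        model = train(labeled)  # retrain after every new human label
        # The most uncertain paper is the one with a probability closest to 0.5.
        unlabeled.sort(key=lambda p: abs(prob_relevant(model, p) - 0.5))
        paper = unlabeled.pop(0)
        prob = prob_relevant(model, paper)
        if max(prob, 1.0 - prob) >= confident:
            # Even the least certain paper is confident enough: the model takes over.
            auto = [(q, prob_relevant(model, q) >= 0.5) for q in [paper] + unlabeled]
            break
        labeled.append((paper, ask_human(paper)))
    return labeled, auto
```

Calling `screen(papers, ask_human)` returns the human-labeled papers and the papers the model labeled by itself. In a real review the researcher would still validate or correct that second list.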


Healthcare | Privacy | NLP

Labeling hundreds of thousands of medical case reports automatically

Hospitals in the Netherlands are required to assign universal diagnosis codes to diagnosed patients, in order to report to the Health Authority (NZa) and Statistical Bureau (CBS). These hospitals hire specifically trained staff to assign these codes. This often boring manual work takes a lot of time and is prone to errors.

DHD wanted to find a way to support hospitals and labelers in this laborious task: for instance, a tool that automatically processes 'easy cases' and supports coders with the harder ones.

Using AI, without privacy violations

This is possible with AI, since millions of patient dossiers (examples) have been labeled before, and a model can learn from them. These examples, however:

- contain quite some sensitive patient and doctor data, and

- are spread over a lot of hospitals, who can't just simply share their data.

That is why we have used:

to develop a tool that can recognise which ICD-10 codes apply to a patient dossier from the free text in, for example, discharge letters and OR reports.

Saving time with certainty and explainability

When developing AI models, you should make sure that they are learning the right things. Especially in sensitive applications, you should make sure the AI makes few mistakes, and that you understand why it makes the decisions it does. Only then can you fully automate tasks. And even then, you should not always want to.

Given the requirement that it should be at least 80% certain, our AI model (algorithm) was able to automatically assign over 30% of the codes. This might sound low, but imagine that you could already save a third of your work time!

For cases where the AI's certainty was below this very high threshold, the algorithm can give a top 5 of codes most likely to match the case (with explanation!), to drastically reduce the time required to manually assign the label.
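The routing described above can be sketched in a few lines. This is an illustration, not the production tool: the function name `route_case`, the input format (a dictionary from code to predicted probability) and the single-code simplification are assumptions. Only the 80% threshold and the top 5 come from the text, and the explanations that accompany the suggestions are left out.

```python
def route_case(code_probs, threshold=0.80, top_k=5):
    """Auto-assign a code when the model is certain enough, else suggest a shortlist.

    code_probs maps a diagnosis code to the model's probability for that code.
    """
    if not code_probs:
        raise ValueError("code_probs must contain at least one code")
    # Rank codes from most to least likely.
    ranked = sorted(code_probs.items(), key=lambda item: item[1], reverse=True)
    best_code, best_prob = ranked[0]
    if best_prob >= threshold:
        return {"action": "auto", "codes": [best_code]}
    # Below the threshold: hand the most likely codes to a human coder.
    return {"action": "review", "codes": [code for code, _ in ranked[:top_k]]}


# The probabilities below are made up for illustration.
easy_case = {"J18.9": 0.93, "J15.9": 0.04, "J22": 0.03}
print(route_case(easy_case))
```

A higher `threshold` means fewer mistakes in the automated part, but also fewer cases handled without a human.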

Industry | Insights | Prediction

Better management of a massive mining operation

RAG, formerly Germany's largest coal mining company, has to pump tonnes of water out of old mineshafts every day. Exactly which water flows into the mine, and when, is not well understood. Landscape has built a model using real world data to predict this amount of water months in advance. This also indicates the required amount of pumping, which enables RAG to improve their resource management!


Industry | Operations Optimisation

Teaching an AI to run a power plant

The same goes for everyone: the longer or more often you do something, the better you get at it. You experience different situations and contexts, and subsequently know how to act on these.

People are quite quick at this, learning from only a few examples. But computers are definitely better at recognizing patterns, especially when many variables are involved.

For operating a power plant the same is true. We know quite well what needs to happen when, but could we become even better at managing energy? Could we detect and defer potential anomalies sooner? Could we make our operations more sustainable? And could an AI discover this?

With Digital Twins and Reinforcement Learning

Bingo! Hype word collection complete.

But it is true. Together with EKu.SIM, which develops Digital Twins for critical infrastructures, we are researching how we can become better at operating power plants by training an AI 'freely' on many simulations. In this sector, even small improvements can make a big difference!

Culture | Prediction

Predict the visitors

How full will the park be? How many guests can we expect this week? A zoo wanted to be able to predict what the busy and slow hours would be in the coming weeks.

Based on historical data

In order to do this, we:

- Combined their historical data with datasets like the weather, holidays, events around town, etc.

- Examined which factors had the largest impact on visitor numbers

- Developed an AI model that predicts the number of visitors per hour

- Represented the historical data, the predictions and the influencing factors in a user-friendly dashboard
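The first three steps can be illustrated with a very simple baseline. This is not the model we built for the zoo: it only averages historical counts per combination of factors. The data layout, the function names and the two factors (`rainy`, `holiday`) are assumptions for the example.

```python
from collections import defaultdict
from statistics import mean


def combine(visits, weather, holidays):
    """Join hourly visitor counts with weather and holiday data on the date.

    visits:   {(date, hour): visitor_count}
    weather:  {date: True if it rained that day}
    holidays: set of dates that are holidays
    """
    return [
        {"hour": hour, "rainy": weather[date], "holiday": date in holidays, "visitors": count}
        for (date, hour), count in visits.items()
    ]


def factor_effect(rows, factor):
    """Mean visitors when the factor is true minus the mean when it is false.

    Needs at least one row with and one row without the factor.
    """
    with_factor = [r["visitors"] for r in rows if r[factor]]
    without_factor = [r["visitors"] for r in rows if not r[factor]]
    return mean(with_factor) - mean(without_factor)


def fit_baseline(rows):
    """Average the historical visitor counts per (hour, rainy, holiday) combination."""
    groups = defaultdict(list)
    for r in rows:
        groups[(r["hour"], r["rainy"], r["holiday"])].append(r["visitors"])
    return {key: mean(counts) for key, counts in groups.items()}


def predict(model, hour, rainy, holiday):
    """Look up the expected visitors; None if this combination was never seen."""
    return model.get((hour, rainy, holiday))
```

A real model would use many more factors and generalise to combinations it has never seen, but the flow is the same: combine the data, check which factors matter, then predict per hour.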

To improve planning

This AI is able to predict how many visitors the park will receive per hour, two weeks in advance. These numbers are split by type of guest, such as card holders (who enter for free), tourists from abroad or groups of schoolchildren.

And understand your customers even better

Not only is it nice to be able to plan better and thus prevent big surpluses and deficits: by discovering patterns in your data, you can also get new insights. Of course you know your customer like no other, but we have seen that the insights from data science can often still surprise even the experts. Nice bonus!

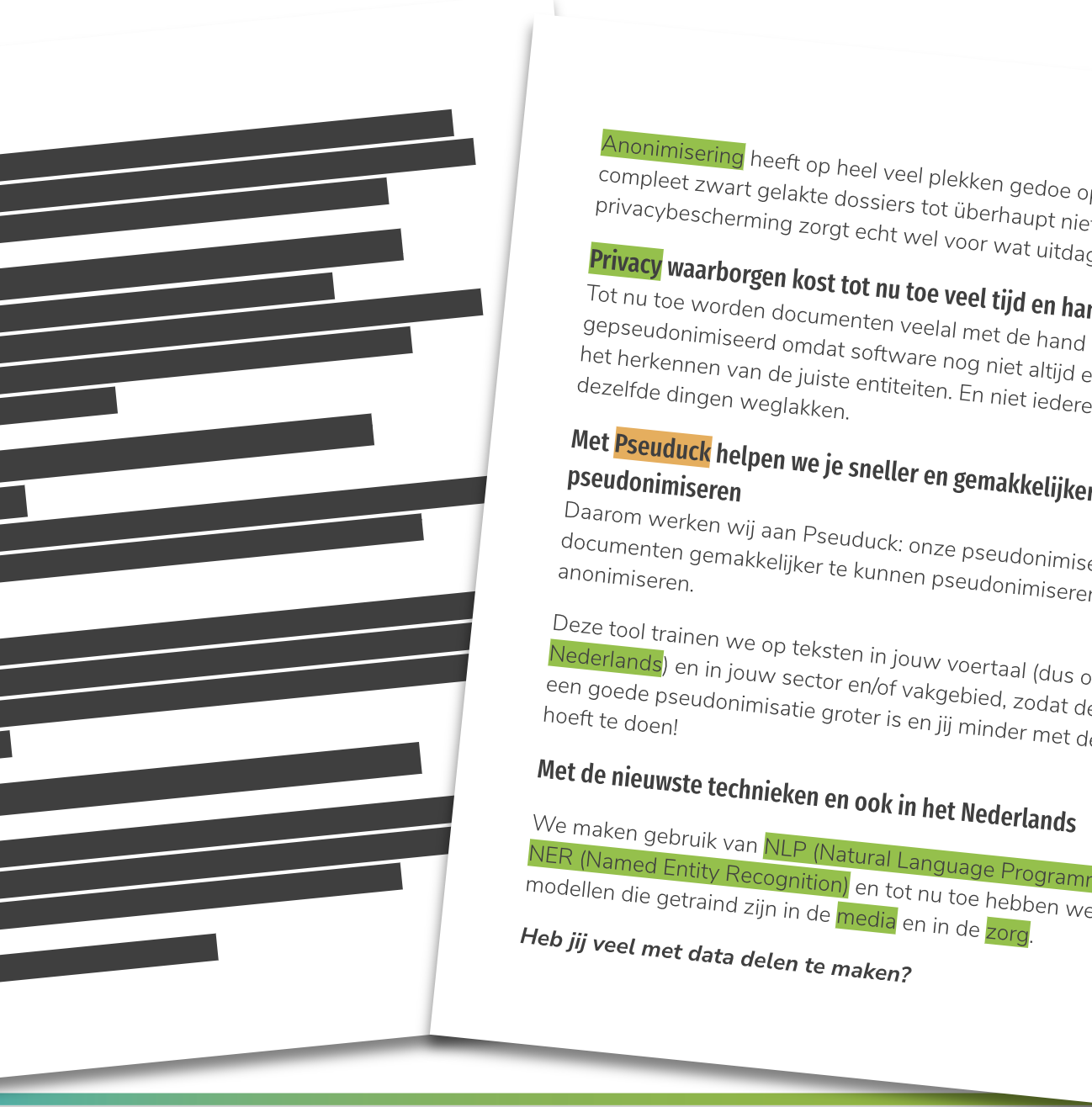

Pseuduck | Pseudonymising | Anonymising | Privacy

Ensuring privacy when sharing data

'Just sharing data or documents' is not that simple. They often contain personal and private information. So if you want to comply with the GDPR, you will often need to put some time and effort into anonymising or pseudonymising the data before you can work with it.

Today, most anonymisation work is done by hand. This is a lot of work, costs quite some time and is, like any other crappy job, prone to errors. Especially when it concerns free text!

Pseuduck to the rescue for GDPR-proof texts

Pseuduck is a pseudonymisation tool developed by us that makes it easier to make your free texts, ranging from small text fields to large (collections of) documents, GDPR-proof.

The benefit of Pseuduck is that we can teach it to specialise in different fields and sectors, so that it pseudonymises or anonymises your texts using your jargon and according to your needs and requirements.
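To show what pseudonymising free text means in practice, here is a small rule-based sketch. It is not how Pseuduck works internally, which is not described here. The regular expression, the `known_names` list and the placeholder format are assumptions for the example. The key idea is that the same person or e-mail address always gets the same placeholder, so the text stays readable.

```python
import re

# Simplified e-mail pattern, good enough for the example.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymise(text, known_names):
    """Replace e-mail addresses and known names with consistent placeholders.

    Returns the new text and the mapping that was used. Keeping the mapping
    allows re-identification later; throwing it away makes that much harder.
    """
    mapping = {}

    def placeholder(kind, value):
        key = (kind, value.lower())
        if key not in mapping:
            number = sum(1 for existing in mapping if existing[0] == kind) + 1
            mapping[key] = f"[{kind}_{number}]"
        return mapping[key]

    # E-mail addresses first, so that names inside an address are not replaced separately.
    text = EMAIL.sub(lambda m: placeholder("EMAIL", m.group()), text)
    for name in known_names:
        pattern = re.compile(rf"\b{re.escape(name)}\b", re.IGNORECASE)
        text = pattern.sub(lambda m: placeholder("PERSON", m.group()), text)
    return text, mapping


note = "Dr. Jansen saw the patient. Mail jansen@example.org; Jansen will follow up."
clean, mapping = pseudonymise(note, ["Jansen"])
print(clean)
```

A fixed list of names only goes so far. Free text is full of spelling variants, nicknames and context-dependent identifiers, which is why a tool that learns your jargon is useful.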

What sensitive texts do you have to work with?


Healthcare | Sensor Data

Sensor data in clinical research

How can we use wearables in clinical research? Our client frequently does medical research on test subjects and wants to utilise sensor data for clinical studies, and be able to process the huge amounts of data that these sensors generate.

What did we do?

- Examined sensor data for usability

- Helped design a study for clinical validation of sensor-based technology

- Gave strategic advice on setting up a Big Data environment and tooling

Based on our results, a clinical trial was started in which the principal objective is to automatically distinguish patients with a certain disease from healthy controls.

Together with this client we are developing AI models that can directly diagnose patients from sensor data.

Industry | Data Management

Only save the data you really need

Airborne Oil & Gas, a pipeline manufacturer, wanted to equip their factory line with sensors and cameras, to gather data about their production process. This data can be used to model a digital twin, a virtual copy of the factory, and to train AI models to do various tasks.

But before that could happen, they first needed a way to store the vast amounts of data that these sensors would generate. They asked us to determine the storage needs, decide what data to acquire and optimise the amount of data stored.


Business Services | Image Recognition

All rooftops in the Netherlands measured and categorised

How high is a building? What type of roof does it have? The client, a data aggregator, wanted a dataset containing the heights and roof profiles of all buildings in the Netherlands.

What did we do?

- Coupled satellite and open map data and determined the height of every Dutch building

- Gathered and combined tens of thousands of 'labelled examples' of roof types from various sources

- Trained an AI model to recognize the most common types of roofs

Our model constructed the required dataset, assigning a roof type to each and every building in the Netherlands. With this new data the client can improve their own models. They use these, for instance, to predict how likely someone is to move in the near future.